Accelerating the Adoption of AI Models

In collaboration with industry, Peter Darveau, P. Eng., contributed to a project to develop an open-source machine learning (ML) model for identifying and analyzing root causes in plant operations at a facility. The project is part of Stage 2 of their AI maturity framework. Hexagon Technology in Oakville, Ontario, provides education on and develops transformative AI solutions that bridge human and machine collaboration. In this post, we discuss how a machine learning model can reduce time-consuming root cause analysis, as an alternative to the troubleshooting and analysis methods that come with legacy controllers and software.

Control systems in place today work mostly on binary logic (1s and 0s) or some basic form of linear control incorporating proportional gain and possibly an offset. In some cases, such as process control, the rate of change of the output relative to the rate of change of the input is factored in to stabilize an otherwise unstable output. While the various operations and sequences perform their respective tasks well independently of each other, the function of the controller as a whole is ignored. Advances in neural network theory, IIoT and computing technology offer new ways of getting more out of legacy controllers.
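As a rough illustration of the linear control described above, the sketch below computes one controller output from a proportional gain and an offset, with an optional rate-of-change term. The function name, the gain values and the choice to apply the rate term to the error are all assumptions made for illustration; they are not taken from any particular controller.

```python
def control_output(setpoint, measured, prev_error, dt,
                   kp=2.0, offset=10.0, kd=0.0):
    """Return (output, error) for a single control step.

    kp, offset and kd are hypothetical tuning values.
    """
    error = setpoint - measured
    # Rate of change of the error over the last time step.
    error_rate = (error - prev_error) / dt
    # Proportional gain plus offset, plus an optional rate-of-change term.
    output = kp * error + offset + kd * error_rate
    return output, error


# Steady error of 5 units, no rate term: 2.0 * 5.0 + 10.0
print(control_output(50.0, 45.0, prev_error=5.0, dt=1.0))  # (20.0, 5.0)
```

With `kd` left at zero the output is a straight-line function of the error, which is the point the rest of this post builds on.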

What is the advantage of looking at the controller as a whole? We can imagine a box with many inputs and outputs. By performing statistical analysis around what the controller controls, we can visualize how our controller is performing and make transactional parameter adjustments to get the desired output performance. This is generally what Statistical Process Control (SPC) does. But doing it this way involves an external control method, and it does not give us an optimized model of all the control sequences that takes into account the sensitivities to all the inputs.
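A minimal sketch of the statistical view described above, assuming a simple check against control limits placed three standard deviations around the mean of a baseline sample. The function names and readings are hypothetical, and using the plain sample standard deviation is a simplification of what a production SPC chart would do.

```python
from statistics import mean, stdev


def control_limits(baseline, sigmas=3.0):
    """Return (lower, center, upper) limits from baseline readings."""
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation
    return center - sigmas * spread, center, center + sigmas * spread


def out_of_control(samples, baseline, sigmas=3.0):
    """Return the samples that fall outside the control limits."""
    lower, _, upper = control_limits(baseline, sigmas)
    return [x for x in samples if x < lower or x > upper]


baseline = [10.0, 10.2, 9.8, 10.1, 9.9]  # hypothetical readings
print(out_of_control([10.3, 10.6, 9.4], baseline))  # [10.6, 9.4]
```

Note that the check sits outside the controller: it flags readings for someone to act on, but it does not model how the control sequences respond to their inputs.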

Developing an optimized model of the controller is only beneficial if we can optimize the box with some method of error correction, that is, adjust the box so that the errors between what is expected at the outputs and what is observed are minimized. This is where neural networks come into play.
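To make "the errors between what is expected and what is observed" concrete, here is a small sketch that scores a set of outputs with the mean squared error. The choice of mean squared error is an assumption for illustration, since the post does not name a specific error measure, and the function name is hypothetical.

```python
def mean_squared_error(expected, observed):
    """Average of the squared differences between expected and observed outputs."""
    if len(expected) != len(observed):
        raise ValueError("expected and observed must have the same length")
    if not expected:
        raise ValueError("at least one output is required")
    return sum((e - o) ** 2 for e, o in zip(expected, observed)) / len(expected)


# Two candidate settings of the "box": the second tracks the targets more closely.
expected = [1.0, 2.0, 3.0]
print(mean_squared_error(expected, [1.0, 2.0, 5.0]))
print(mean_squared_error(expected, [1.0, 2.0, 3.5]))
```

Optimizing the box then amounts to searching for the settings that drive this number as low as possible.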

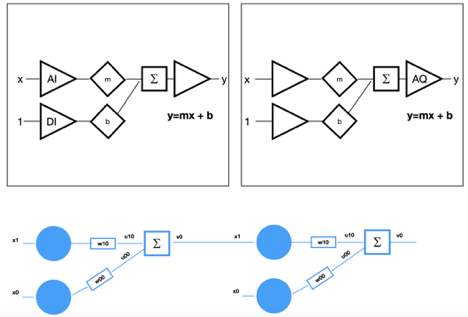

It can be shown that a series of control sequences can be represented as a neural network, and that this network is related to the most iconic model of all: linear regression, given by the familiar algebraic formula for a straight line, y = mx + b. By cascading the output of each linear regression layer (function) into the next, we obtain a simple network.
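The cascade described above can be sketched in a few lines. This is an illustrative example only: each layer is a single `(m, b)` pair applied to a scalar, and the function names and numeric values are hypothetical.

```python
def linear_layer(x, m, b):
    """One straight-line layer: y = m * x + b."""
    return m * x + b


def forward(x, layers):
    """Feed x through each (m, b) layer in turn, output of one into the next."""
    for m, b in layers:
        x = linear_layer(x, m, b)
    return x


layers = [(2.0, 1.0), (0.5, -3.0)]  # hypothetical slopes and intercepts
print(forward(4.0, layers))  # 1.5
```

Data moves through the list in one direction only, from the first layer to the last.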

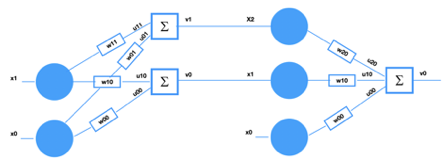

By replacing and adding some nomenclature to keep track of everything, we have turned a straight-line equation into a mathematical network. The diagram flows unidirectionally from the inputs to the outputs. Adding more functionality creates a more complex network.

It is important to note in this example that no matter how we analyze or modify this model, the output(s) will always behave according to a straight line, and this explains why the model represented by all the control sequences running in a typical controller can’t be optimized.
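The straight-line behaviour can be checked numerically. The sketch below, using hypothetical `(m, b)` values, folds any cascade of straight-line layers into a single equivalent slope and intercept and confirms that both give the same output.

```python
def forward(x, layers):
    """Feed x through each (m, b) layer in turn."""
    for m, b in layers:
        x = m * x + b
    return x


def collapse(layers):
    """Fold a cascade of straight-line layers into one (slope, intercept)."""
    slope, intercept = 1.0, 0.0
    for m, b in layers:
        # Applying y = m * u + b to u = slope * x + intercept
        # gives y = (m * slope) * x + (m * intercept + b).
        slope, intercept = m * slope, m * intercept + b
    return slope, intercept


layers = [(2.0, 1.0), (0.5, -3.0), (4.0, 2.0)]  # hypothetical values
slope, intercept = collapse(layers)
print(slope, intercept)  # 4.0 -8.0
print(forward(3.0, layers) == slope * 3.0 + intercept)  # True
```

However many layers are added, the whole cascade is still one line, so extra layers add no expressive power on their own.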

In another post, I will explain how this problem can be resolved by introducing an activation function into the network. It is this function that shapes the output in a non-linear (curved) fashion, allowing the network to perform error minimization by factoring in the sensitivity of the change of the outputs to the change of the inputs in the network.
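As a small preview, the sketch below shows how an activation function bends the output. It assumes `tanh` as the activation, which is only one common choice and is not named in this post; the layer values and helper names are hypothetical. A second difference of zero means the output lies on a straight line; a non-zero value means it curves.

```python
import math


def forward(x, layers, activation=None):
    """Feed x through (m, b) layers, optionally applying an activation after each."""
    for m, b in layers:
        x = m * x + b
        if activation is not None:
            x = activation(x)
    return x


def second_difference(f, x, h=1.0):
    """f(x + h) - 2 f(x) + f(x - h): zero for any straight line."""
    return f(x + h) - 2.0 * f(x) + f(x - h)


layers = [(2.0, 1.0), (0.5, -3.0)]  # hypothetical values

linear = lambda x: forward(x, layers)
curved = lambda x: forward(x, layers, activation=math.tanh)  # tanh is an assumed choice

print(second_difference(linear, 1.0))  # 0.0, still a straight line
print(second_difference(curved, 1.0))  # non-zero, the output now curves
```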

AI systems perform at or above human level for many specialized tasks. This includes tasks that were never before possible with written rules or software, such as recognizing and categorizing millions of states. Advances in technology, such as Intel’s Xeon processors, Movidius NCS and oneAPI framework, make that possible.

The key to AI maturity is envisioning what the end state could look like and seeking support for a clear path from the current state to that vision. At Hexagon Technology, we are inspired by the potential of AI. We’ve also been privileged to go on this transformational journey with business leaders, helping them understand the promise of AI to create the future of industry, services, customer experiences and the environment.

References:

[1] Darveau, P., 2021. Prognostics and Availability for Industrial Equipment Using High Performance Computing (HPC) and AI Technology. Preprints, 2021090068.

[2] Darveau, P., 1993. C programming in programmable logic controllers.

[3] Darveau, P., 2015. Wearable Air Quality Monitor. U.S. Patent Application 14/162,897.